Introduction

The Quant Research Platform relies on a robust data ingestion pipeline to collect, process, and store market data. This pipeline is the foundation of my trading system, enabling me to analyze VIX and SPY data for signal generation and execution.

Key Components

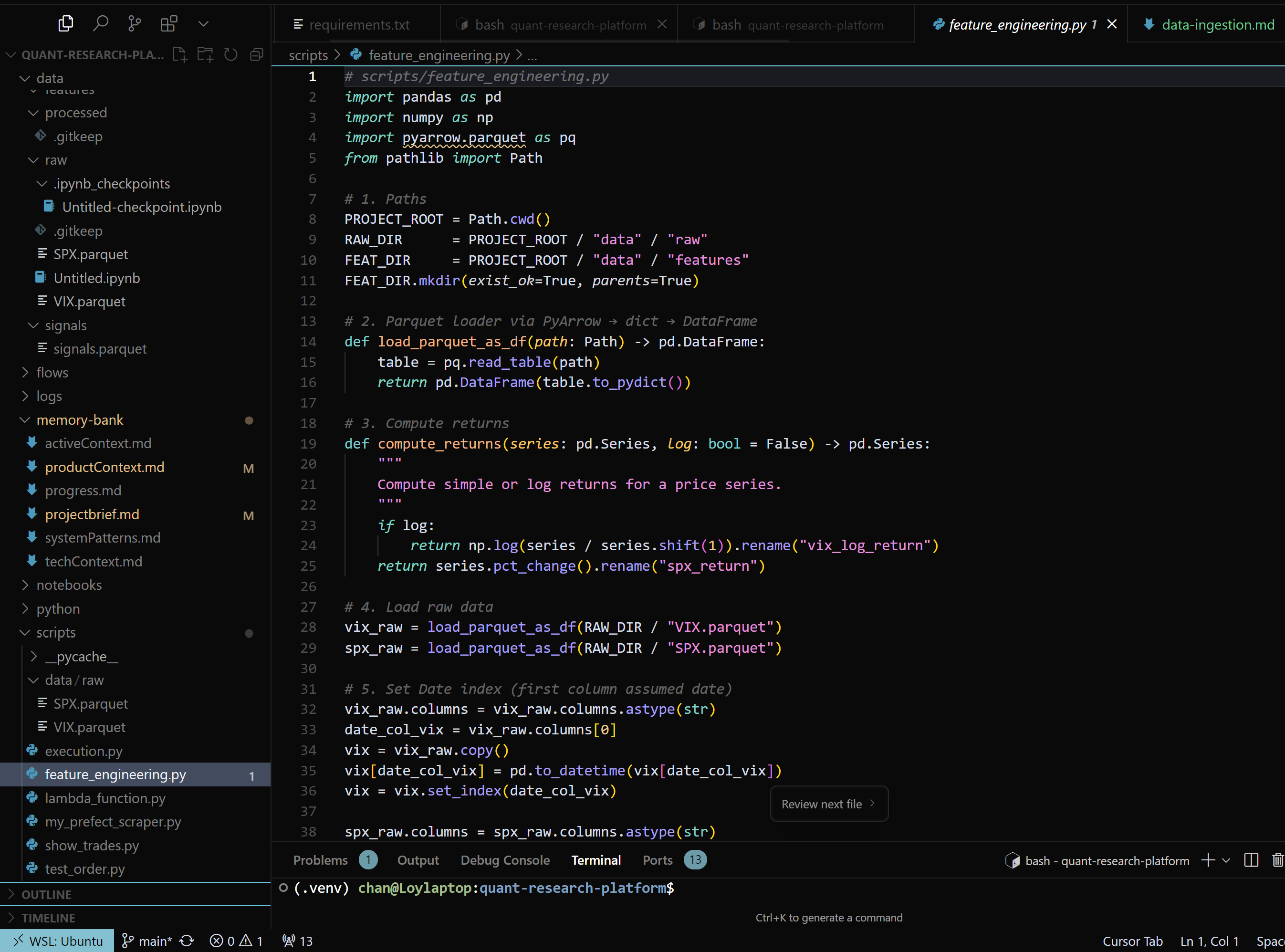

- Data Collection: Automated scripts fetch VIX and SPY data from reliable sources.

- Data Processing: Raw data is cleaned, normalized, and transformed into a format suitable for analysis.

- Data Storage: Processed data is stored in Parquet files for efficient columnar access, and signals are tracked in SQLite.

- Workflow Orchestration: Prefect is used to automate and monitor the data ingestion process, ensuring reliability and scalability.
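The processing and storage steps above can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the platform's actual code. The raw-row format `(ISO date, close)`, the helper names `clean`, `log_returns`, `join`, `init_signal_store` and `record_signal`, and the `signals` table schema are all hypothetical. Daily log returns stand in for the unspecified normalization/transformation step. Parquet output and Prefect orchestration are left out so the example needs only the standard library.

```python
import math
import sqlite3
from datetime import date
from typing import Dict, Iterable, List, Optional, Tuple

# Hypothetical raw row as a fetch step might return it: (ISO date string, close or None).
RawRow = Tuple[str, Optional[float]]


def clean(rows: Iterable[RawRow]) -> Dict[date, float]:
    """Drop missing/invalid closes, parse dates, dedupe by date, sort by date."""
    by_date: Dict[date, float] = {}
    for day, close in rows:
        if close is None or not math.isfinite(close) or close <= 0:
            continue  # skip bad prints rather than guessing a value
        by_date[date.fromisoformat(day)] = float(close)  # a later duplicate wins
    return dict(sorted(by_date.items()))


def log_returns(series: Dict[date, float]) -> Dict[date, float]:
    """r_t = ln(P_t / P_{t-1}) between consecutive observations of one series."""
    days = list(series)  # clean() already returns keys in date order
    return {d1: math.log(series[d1] / series[d0]) for d0, d1 in zip(days, days[1:])}


def join(vix: Dict[date, float], spy_ret: Dict[date, float]) -> List[Tuple[date, float, float]]:
    """Inner-join on date: (day, VIX level, SPY log return) for days in both series."""
    return [(d, vix[d], spy_ret[d]) for d in sorted(vix.keys() & spy_ret.keys())]


def init_signal_store(conn: sqlite3.Connection) -> None:
    """Create a hypothetical signals table keyed by (day, name)."""
    with conn:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS signals ("
            "day TEXT NOT NULL, name TEXT NOT NULL, value REAL NOT NULL, "
            "PRIMARY KEY (day, name))"
        )


def record_signal(conn: sqlite3.Connection, day: date, name: str, value: float) -> None:
    """Upsert one value, so re-running a day overwrites instead of duplicating."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT OR REPLACE INTO signals (day, name, value) VALUES (?, ?, ?)",
            (day.isoformat(), name, value),
        )


if __name__ == "__main__":
    # Made-up illustrative values, not real market data.
    vix = clean([("2024-01-02", 13.0), ("2024-01-03", 14.0), ("2024-01-04", None)])
    spy = clean([("2024-01-02", 100.0), ("2024-01-03", 101.0), ("2024-01-04", 99.0)])
    conn = sqlite3.connect(":memory:")
    init_signal_store(conn)
    for day, vix_level, spy_ret in join(vix, log_returns(spy)):
        print(day, vix_level, round(spy_ret, 5))
        # Placeholder: a real signal value would come from the strategy logic.
        record_signal(conn, day, "example_signal", spy_ret)
```

Two design choices are worth noting. SPY returns are computed on SPY's own cleaned series before the join, so a missing VIX print does not stretch a single "daily" return across two sessions. The `INSERT OR REPLACE` upsert keeps the signal table idempotent, which matters if an orchestrator such as Prefect retries a failed run for the same day.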

Mathematical Framework

The data ingestion pipeline uses the following mathematical principles:

Results

- The pipeline successfully ingests and processes market data with high accuracy.

- Data is stored efficiently, allowing for quick access during analysis and trading.

- The orchestration system ensures that data ingestion is reliable and scalable.